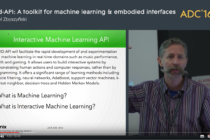

RAPID-MIX API: a toolkit for machine learning & embodied interfaces

At the JUCE Audio Developers Conference, 3-4 November 2016.

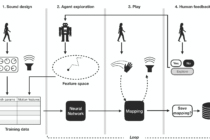

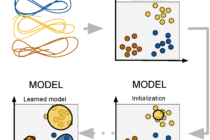

This lecture presents the newly developed RAPID-MIX API, a comprehensive, easy-to-use toolkit and JUCE library. It brings together the software elements needed to integrate a wide range of novel sensor technologies into products, prototypes, and performances. API users have access to advanced machine learning algorithms that can turn large streams of sensor data into expressive gestures for use in music or games. A powerful but lightweight audio library provides easy-to-use tools for complex sound synthesis.

The RAPID-MIX API was created by the RAPID-MIX consortium, which aims to accelerate the production of the next generation of Multimodal Interactive eXpressive (MIX) technologies by building hardware and software tools and putting them in the hands of users and makers. We have devoted years of research to the design and evaluation of embodied, implicit, and wearable human-computer interfaces, and we are bringing cutting-edge knowledge from three leading European research labs to a consortium of five creative companies.